Next Generation Lakehouse: New Engine for the Intelligent Future | Apache Hudi Meetup Asia Recap

This blog was translated from the original blog in Chinese.

Recently, the Apache Hudi Meetup Asia, hosted by JD.com, was successfully held at JD.com Group headquarters. Four technical experts from Onehouse, JD.com, Kuaishou, and Huawei previewed Apache Hudi release 1.1 and shared their own approaches to building data lakehouses. From AI scenario support to real-time data processing and cost optimization, each topic addressed the pain points that data engineers care about most.

Hudi Community Leader Joins Remotely

First, Vinoth Chandar, CEO & Founder of Onehouse and Apache Hudi PMC Chair, delivered the opening remarks via video. He stated that after eight years of development, Hudi has become an important cornerstone in the data lake domain, and its vision has transformed into widely recognized achievements in the industry. The 1.0 version released last year marked the project's entry into a mature stage, bringing many database-like capabilities to the lakehouse.

Currently, the community is steadily advancing the 1.x series of versions, focusing on improving Flink performance, launching a new Trino connector, and enhancing interoperability through a pluggable table format layer. Facing the rapid development in the data lake field, Vinoth emphasized that excellent technology and robust design are the keys to long-term success. Hudi has now achieved many capabilities that commercial engines have not been able to deliver, thanks to its intelligent and creative community. Looking ahead, the community will be committed to building Hudi into a storage engine that supports all scenarios from BI to AI, exploring trending areas including unstructured data management and vector search.

Vinoth gave special thanks to JD.com for its significant contributions to Apache Hudi: 6 of the top 100 contributors come from JD.com. He closed by inviting more developers to join this vibrant community and jointly promote innovation in data infrastructure.

JD Retail: Data Lake Technical Challenges and Outlook

As the co-host of the event, Zhang Ke, Head of AI Infra & Big Data Computing at JD Retail, welcomed guests and attendees who participated in this Meetup. He also pointed out two core challenges facing the data domain:

At the BI level, the long-standing problem of "unified stream and batch processing" has not yet been fully solved, forcing data engineers to duplicate work across multiple systems. Solving it requires fundamentally restructuring the data architecture and finding a new paradigm for unified stream and batch processing.

At the AI level, with the arrival of the multimodal era, traditional solutions that only handle structured data can no longer meet the needs. Whether it is data supply efficiency for model training, real-time feature computation for recommendation systems, or knowledge base construction required for large models, there is an urgent need for an underlying support system that can unify storage of multimodal data while balancing cost and performance.

The industry is looking forward to building a storage foundation through open-source technologies like Apache Hudi that can uniformly carry batch processing, stream computing, data analysis, and AI workloads.

Apache Hudi 1.1 Preview and AI-Native Lakehouse Evolution

In the session "Apache Hudi 1.1 Preview and AI-Native Lakehouse Evolution," Ethan Guo (Yihua Guo), Data Architecture Engineer at Onehouse and Apache Hudi PMC member, shared Hudi's technical evolution path and future outlook. As the top contributor to the Hudi codebase, he systematically elaborated on the project positioning, version planning, and AI-native architecture.

Ethan pointed out that Apache Hudi's positioning goes far beyond being an open table format—it is an embedded, headless, distributed database system built on top of cloud storage. Hudi is moving from "a transactional database on the lakehouse" toward "an AI-native Lakehouse platform."

The 1.1 release, which was upcoming at the time of the talk and has since shipped, brings several important breakthroughs. Among them, the pluggable table format architecture addresses the pain point of format fragmentation in the current data lake ecosystem, enabling users to "write once, read in multiple formats." Hudi has also deeply optimized its Flink integration: an asynchronous generation mechanism removes the throughput bottleneck in streaming writes, and a brand-new native writer processes Flink RowData end to end instead of going through Avro, significantly reducing serialization overhead and GC pressure. Real-world tests showed that Hudi 1.1's throughput in streaming lake ingestion scenarios was 3.5 times that of version 1.0.

Facing new challenges brought by the AI era, Hudi is actively building a native AI data foundation. By supporting unstructured data storage, optimizing column group structures for multimodal data, providing built-in vector indexing capabilities, and building a unified storage layer that supports transactions and version control, Hudi is committed to providing highly real-time, traceable, and easily extensible data support for AI workflows. This series of evolutions will propel Apache Hudi from an excellent data lake framework to a core data infrastructure supporting the AI era.

Latest Architecture Evolution of Apache Hudi at JD.com

In the session "Latest Architecture Evolution of Apache Hudi at JD.com," Han Fei, Head of JD Real-time Data Platform, systematically introduced the latest architectural evolution and implementation results of Hudi in JD's production environment.

To address the performance bottleneck of native MOR tables in high-throughput scenarios, JD's Data Lake team restructured the data organization protocol of Hudi MOR tables around an LSM-Tree architecture. Replacing the original "Avro + Append" update mode with a "Parquet + Create" mode enabled lock-free concurrent writes. Combined with further optimizations such as an Engine-Native data format, a Remote Partitioner strategy, and a streaming incremental Compaction scheduling mechanism, this significantly improved read and write performance. Benchmark results showed that the MOR-LSM solution's read and write performance was 2-10 times that of the native MOR-Avro solution.
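The general LSM idea behind a "create, never append" write path can be sketched as follows. This is a minimal illustration, not JD's implementation: the function names `write_run` and `merge_on_read` are hypothetical, in-memory lists stand in for sorted Parquet files, and a plain integer sequence number stands in for commit ordering.

```python
import heapq
from itertools import groupby
from operator import itemgetter


def write_run(records, sequence):
    """Create a new immutable sorted run instead of appending to an existing log.

    Each entry is (key, sequence, value); keys are unique within one run,
    so sorting the tuples orders the run by key.
    """
    return sorted((key, sequence, value) for key, value in records.items())


def merge_on_read(runs):
    """K-way merge of sorted runs; for each key, the highest sequence wins."""
    merged = heapq.merge(*runs, key=itemgetter(0, 1))
    result = {}
    for key, group in groupby(merged, key=itemgetter(0)):
        *_, latest = group  # entries for a key arrive in ascending sequence order
        result[key] = latest[2]
    return result


# Two writers each create their own run; neither touches the other's file.
run_1 = write_run({"a": 1, "b": 2}, sequence=1)
run_2 = write_run({"b": 20, "c": 30}, sequence=2)
snapshot = merge_on_read([run_1, run_2])
```

Because every write produces a fresh sorted run, writers in this sketch never contend for the same file, and the reader resolves conflicts with a streaming merge rather than a random-access lookup.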

With the growing near-real-time requirements of BI scenarios, streaming dimension widening has become a common challenge in data processing across multiple subject domains. Traditional Flink streaming Joins suffer from state bloat and high maintenance complexity. Drawing on Hudi's partial-update multi-stream splicing approach, JD's Data Lake team built an indexing mechanism that supports primary-foreign key mapping. Forward and reverse indexes work together to complete streaming dimension association and real-time updates efficiently. A pluggable HBase backend was introduced as index storage to ensure high-performance access in point query scenarios.
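One way to read the forward and reverse index cooperation is sketched below. This is an interpretation under stated assumptions, not JD's design: the class `StreamingDimensionJoiner` and its methods are hypothetical, and plain dictionaries stand in for the HBase-backed index and for the Hudi wide table.

```python
from collections import defaultdict


class StreamingDimensionJoiner:
    """Toy model: widen fact rows with dimension attributes as both streams arrive."""

    def __init__(self):
        self.forward = {}                # fact primary key -> foreign key
        self.reverse = defaultdict(set)  # foreign key -> fact primary keys
        self.dimensions = {}             # foreign key -> dimension attributes
        self.wide_table = {}             # fact primary key -> widened row

    def on_fact(self, pk, fk, fact_fields):
        # Keep both indexes consistent if the fact row now points to another dimension.
        old_fk = self.forward.get(pk)
        if old_fk is not None and old_fk != fk:
            self.reverse[old_fk].discard(pk)
        self.forward[pk] = fk
        self.reverse[fk].add(pk)
        # Splice in whatever dimension attributes are already known.
        row = dict(fact_fields)
        row.update(self.dimensions.get(fk, {}))
        self.wide_table[pk] = row

    def on_dimension(self, fk, dim_fields):
        self.dimensions[fk] = dict(dim_fields)
        # The reverse index finds every fact row affected by this dimension change.
        for pk in self.reverse.get(fk, ()):
            self.wide_table[pk].update(dim_fields)


joiner = StreamingDimensionJoiner()
joiner.on_fact("order-1", "sku-9", {"qty": 2})
joiner.on_dimension("sku-9", {"sku_name": "kettle"})
joiner.on_fact("order-2", "sku-9", {"qty": 1})
joiner.on_dimension("sku-9", {"sku_name": "electric kettle"})
```

In this sketch, neither stream has to buffer the other in join state: a late dimension update is applied as a partial update to exactly the rows the reverse index points to.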

In exploring AI scenarios, the team designed and implemented the Hudi NativeIO SDK. This SDK builds four core modules: data invocation layer, cross-language Transformation layer, Hudi view management layer, and high-performance query layer, creating an end-to-end process for sample training engines to complete training directly based on data lake tables.

JD has deeply integrated these capabilities with business scenarios, applying them to the near-real-time transformation of the traffic data warehouse ADM layer. After a series of optimizations, the write throughput of the traffic browsing link increased from 45 million per minute to 80 million per minute (roughly 1.8x), Compaction execution efficiency doubled, and SKU dimension information is now kept consistent in real time, completing the transition from a T+1 offline repair mode to a real-time processing mode.

While advancing its in-house technology, JD has also actively given back to the open-source community, with a total of 109 contributed and merged PRs. In the future, the team will continue to deepen Hudi's application in the real-time data lake domain, providing stronger data support for business innovation.

How Kuaishou's Real-time Lake Ingestion Empowers BI & AI Scenario Architecture Upgrade

In the session "How Kuaishou's Real-time Lake Ingestion Empowers BI & AI Scenario Architecture Upgrade," Wang Zeyu, Data Architecture R&D Engineer at Kuaishou, introduced Kuaishou's complete evolution path and practical experience in building a real-time data lake based on Apache Hudi.

For traditional BI data warehouse scenarios, Kuaishou upgraded its architecture from Mysql2Hive to Mysql2Hudi2.0. The new design introduces Hudi hourly partition tables, supports multiple query modes such as full, incremental, and snapshot, and adds Full Compact and Minor Compact mechanisms to optimize data layout. Bucket heterogeneity allows full partitions and incremental partitions to use different bucket numbers, significantly reducing lake ingestion resource consumption. Compared with the original architecture, the new solution naturally supports long data lifecycles and richer query behaviors. While reducing storage costs, it shortened data readiness time from day-level to minute-level.
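The idea of using different bucket numbers per partition type can be illustrated with a small hash-bucketing sketch. Everything here is an assumption for illustration: the bucket counts (8 and 2), the CRC32 hash, the requirement that one count be a multiple of the other, and the function names are not taken from Kuaishou's implementation or from Hudi's bucket index.

```python
import zlib

# Hypothetical settings: large full partitions get more buckets than
# small incremental partitions.
BUCKETS_BY_PARTITION_TYPE = {"full": 8, "incremental": 2}


def bucket_id(record_key: str, num_buckets: int) -> int:
    """Stable hash bucketing (CRC32 is used here only because it is deterministic)."""
    return zlib.crc32(record_key.encode("utf-8")) % num_buckets


def route(record_key: str, partition_type: str) -> int:
    """Pick the bucket for a record, given the partition type it is written to."""
    return bucket_id(record_key, BUCKETS_BY_PARTITION_TYPE[partition_type])


def full_buckets_for(incremental_bucket: int) -> list:
    """Full-partition buckets that can hold keys from one incremental bucket.

    Valid only because the full bucket count is a multiple of the incremental one.
    """
    full_n = BUCKETS_BY_PARTITION_TYPE["full"]
    inc_n = BUCKETS_BY_PARTITION_TYPE["incremental"]
    return [b for b in range(full_n) if b % inc_n == incremental_bucket]


keys = [f"user-{i}" for i in range(100)]
consistent = all(
    route(k, "full") in full_buckets_for(route(k, "incremental")) for k in keys
)
```

Under these assumptions, a small incremental partition needs only a few writers and files, while merging it into a full partition touches a predictable subset of the full partition's buckets.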

At the AI storage architecture level, Kuaishou built a unified stream-batch data lake architecture, solving the core pain point of inconsistent offline and real-time training data. Through unified storage media, support for unified stream-batch consumption, logical wide table column splicing, and other capabilities, unified management and efficient reuse of training data were achieved. The metadata management mechanism based on Event-time timeline not only ensured data orderliness but also guaranteed real-time write performance through lock-free design.

In the future, Kuaishou will continue to improve the data lake's service capabilities across training, retrieval, analysis, and other scenarios, moving the data lake in a more intelligent and unified direction. Kuaishou's practice shows that a real-time data lake architecture based on Hudi can effectively support the modernization needs of large-scale BI and AI scenarios.

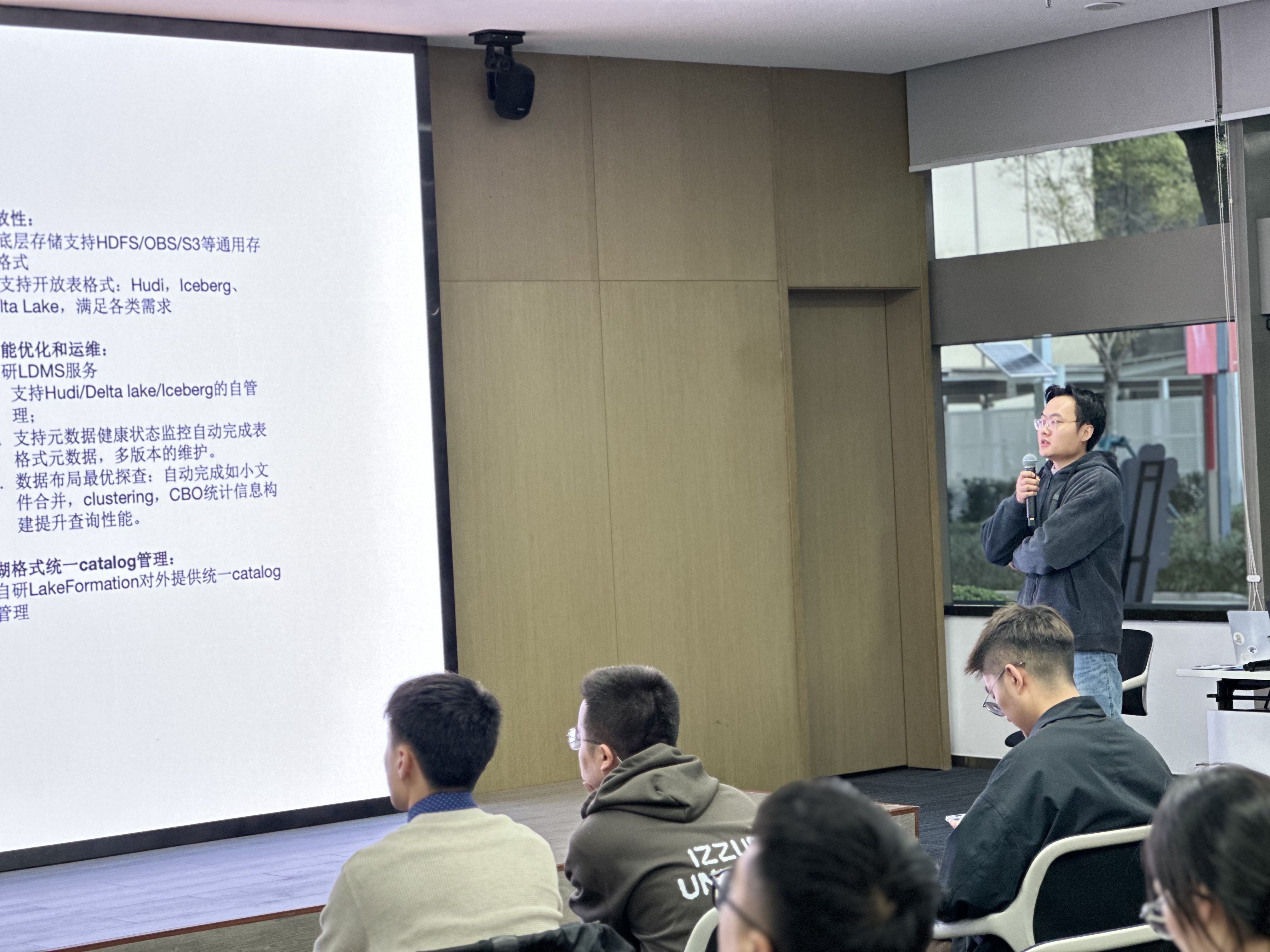

Deep Optimization and AI Exploration of Apache Hudi on Huawei Cloud

In the session "Deep Optimization and AI Exploration of Apache Hudi on Huawei Cloud," Yang Xuan, Big Data Lakehouse Kernel R&D Engineer at Huawei, shared Huawei Cloud's technical practices and innovative breakthroughs in building a new generation Lakehouse architecture based on Apache Hudi. Facing challenges in real-time performance, intelligence, and management efficiency for enterprise-level data platforms, Huawei conducted in-depth exploration in three dimensions: platform architecture, kernel optimization, and ecosystem integration.

At the platform architecture level, Huawei developed the LDMS unified lakehouse management service platform, achieving fully managed operation and maintenance of table services. Through core capabilities such as intelligent data layout optimization and CBO statistics collection, this platform significantly reduced the operational complexity of the lakehouse platform, allowing users to focus more on business logic rather than underlying maintenance.

In terms of kernel optimization, Huawei made multiple deep modifications to Apache Hudi. Through de-Avro serialization optimization implemented via RFC-84/87, Flink write performance improved 1-10 times while significantly reducing GC pressure; the innovative LogIndex mechanism effectively solved the streaming read performance bottleneck in object storage scenarios; dynamic Schema change support made CDC lake ingestion processes more flexible; and the introduction of the column clustering mechanism provided a feasible solution for real-time processing of thousand-column sparse wide tables.

Hudi Native built a high-performance IO acceleration layer by rewriting Parquet read/write logic using Rust and adopting Arrow memory format to replace Avro. By providing a unified high-performance Java read/write interface through JNI, it achieved seamless integration with compute engines such as Spark and Flink, laying a solid foundation for future performance breakthroughs.

In ecosystem integration and AI exploration, Huawei built a management architecture that supports multimodal data. Lake table formats manage the metadata of unstructured data while the actual files stay in object storage, which preserves ACID properties and avoids data redundancy. Huawei also integrated LanceDB for efficient vector retrieval, providing data infrastructure support for AI application scenarios such as document retrieval and intelligent Q&A.
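The "metadata in the table, bytes in object storage" pattern can be sketched roughly as follows. This is a simplified illustration under assumptions: the `ObjectRef` fields, the example URIs, and the brute-force cosine search are all hypothetical, and the search merely stands in for a real vector index; no LanceDB or Hudi API is used.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class ObjectRef:
    """One table row: metadata only; the file itself stays in object storage."""
    object_uri: str      # hypothetical location of the unstructured file
    content_type: str
    size_bytes: int
    embedding: tuple     # vector derived from the file's content


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(rows, query, k=1):
    """Brute-force nearest-neighbour search standing in for a vector index."""
    return sorted(rows, key=lambda r: cosine(r.embedding, query), reverse=True)[:k]


rows = [
    ObjectRef("s3://example-bucket/docs/manual.pdf", "application/pdf", 1_048_576, (1.0, 0.0)),
    ObjectRef("s3://example-bucket/images/diagram.png", "image/png", 204_800, (0.0, 1.0)),
]
best = top_k(rows, query=(0.9, 0.1), k=1)
```

Since each row carries only a reference, committing or rolling back a table change in this sketch never copies the underlying files, which is what keeps redundancy low.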

Conclusion

This meetup convinced us that the vast ocean of data lakehouses cannot be separated from the "collective effort" of the open-source community and enterprises. Technologies tempered on the business battlefield ultimately flow back to nourish the entire ecosystem. This may be the purest romance of technology: making complex things simple and making the impossible possible. The road ahead is full of possibilities, and together, we are shaping a more elegant and powerful future for data processing.